Over the years, and especially since we added our streaming ELT-based pipeline, we have improved our analytics data collection and optimised data uploads from AWS to BigQuery (BQ) via Google Cloud Storage (GCS).

We capture more than two dozen types of events, and the business analyses this data to design the user experience and make decisions about it. Before the streaming pipeline was in place, whenever a new event was added to the stack or an existing event was updated, we had to wait for the data to be uploaded to BQ in the following hour before we could validate that the event had been implemented correctly for launch. Because events are fired across different platforms (mWeb, dWeb, iOS and Android), there are thousands of combinations in which an event can be fired. This caused problems: most of the time, by the time the business or project managers noticed that something was wrong or missing in an event launched by the implementing team, it was already too late.

Once the streaming pipeline was in place, the delay before data became available dropped from hours to under two minutes. That should have been enough, but validation was still cumbersome: QA were overwhelmed by the volume of data, and checking whether a logged event was valid in its full form required access to BQ.

To make validation practical, we decided to test the event stream on our staging server in parallel with logging it. Our staging analytics setup is identical to production, as it must be if we want whatever a team ships to behave the same way when they launch their implementation in production. Since we had already separated logging from web request processing, the next step was to use some Ruby magic to run automated tests on the fields within an event, independently of logging. We wrote metaprogramming-based code that fires the test implemented for each field. For example, an event may contain a nested field such as:

```json
{"client" : {"user": {"id": "user-1", "name": "User 1"}}}
```

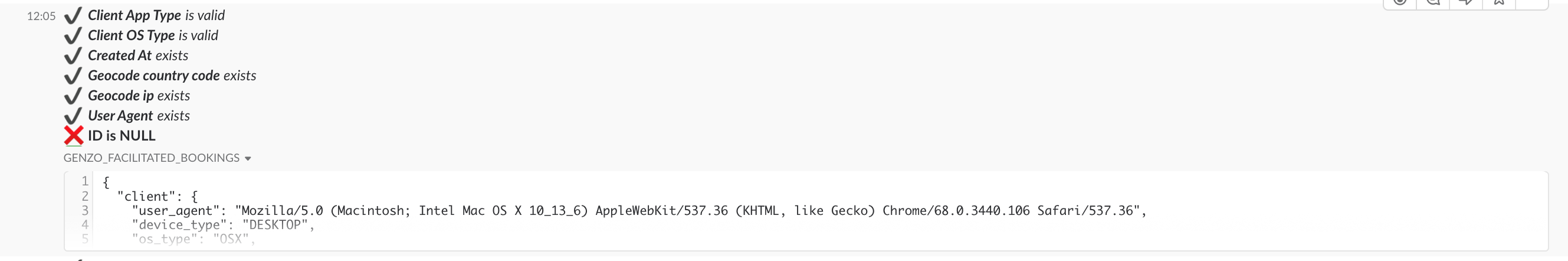

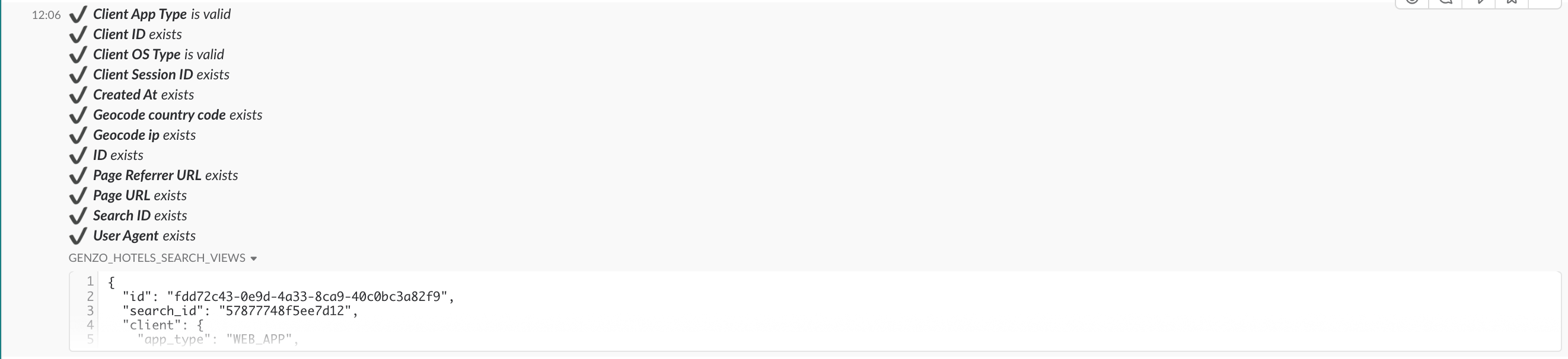

For this event we would fire two test cases, client_user_id and client_user_name (each requires a test implementation to be present). Each object field is parsed concurrently, and all tests also run concurrently. Once this was working, the next step was to report the results to QA and to the teams so they could view their events for validation. For this, we hooked the test results up to Slack. This let us report back both the collected event and a test report indicating whether all fields in a particular event were valid or whether something had failed.
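The field-level testing described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not our production code: the class `EventFieldTests`, the helpers `flatten_fields` and `run_field_tests`, and the validation rules (for example, that user ids start with `user-`) are all hypothetical. It flattens the nested event into field paths such as `client_user_id`, dispatches each path to a test method of the same name via `public_send`, and runs the tests concurrently in threads. Flattening itself is done sequentially here for simplicity.

```ruby
require "json"

# Hypothetical test class: one public method per flattened field path.
# Each method receives the field's value and returns true when it is valid.
class EventFieldTests
  def client_user_id(value)
    # Hypothetical rule: ids look like "user-<something>".
    value.is_a?(String) && value.start_with?("user-")
  end

  def client_user_name(value)
    value.is_a?(String) && !value.strip.empty?
  end
end

# Flatten a nested hash into { "client_user_id" => "user-1", ... }.
def flatten_fields(hash, prefix = nil)
  hash.each_with_object({}) do |(key, value), out|
    name = prefix ? "#{prefix}_#{key}" : key.to_s
    if value.is_a?(Hash)
      out.merge!(flatten_fields(value, name))
    else
      out[name] = value
    end
  end
end

# Run one test per field in its own thread. Only methods defined directly on
# the test class count, so fields like "hash" never hit Object's methods.
# A field without a test is reported as :missing (an implementation must exist).
def run_field_tests(event, tests = EventFieldTests.new)
  defined_tests = tests.class.instance_methods(false)
  threads = flatten_fields(event).map do |field, value|
    Thread.new do
      status =
        begin
          if defined_tests.include?(field.to_sym)
            tests.public_send(field, value) ? :passed : :failed
          else
            :missing
          end
        rescue StandardError
          :error
        end
      [field, status]
    end
  end
  # Thread#value waits for each thread and returns its [field, status] pair.
  threads.map(&:value).to_h
end

event = JSON.parse('{"client": {"user": {"id": "user-1", "name": "User 1"}}}')
p run_field_tests(event)
```

Dispatching by method name keeps adding a new field check down to defining one method, which is the appeal of the metaprogramming approach; reporting `:missing` makes an untested field visible instead of silently passing.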

Events are now tested live on the analytics event stream; the results are searchable, and the Slack messages can be marked with feedback and shared when there are issues. Sometimes it is not enough to test the code that logs data; the data itself must be tested as well.
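The Slack reporting could look something like the sketch below. It assumes a Slack incoming webhook, which accepts a JSON body with a `text` field; the helper names `slack_report_payload` and `post_to_slack`, the message layout, and the `SLACK_WEBHOOK_URL` environment variable are hypothetical. The payload carries a pass/fail header, one line per field and the collected event, so a single message is enough for QA to search, comment on and share.

```ruby
require "json"
require "net/http"
require "uri"

# Build a Slack message body for one event and its field test results.
# results has the (hypothetical) shape { "client_user_id" => :passed, ... }.
def slack_report_payload(event_name, event, results)
  failures = results.reject { |_field, status| status == :passed }
  header =
    if failures.empty?
      ":white_check_mark: #{event_name}: #{results.size}/#{results.size} fields valid"
    else
      ":x: #{event_name}: #{failures.size}/#{results.size} fields failed"
    end
  field_lines = results.map { |field, status| "- #{field}: #{status}" }
  { text: [header, *field_lines, "Event: #{event.to_json}"].join("\n") }
end

# POST the payload to an incoming webhook URL (assumed to be configured elsewhere).
def post_to_slack(webhook_url, payload)
  Net::HTTP.post(URI(webhook_url), payload.to_json, "Content-Type" => "application/json")
end

results = { "client_user_id" => :passed, "client_user_name" => :failed }
event = { "client" => { "user" => { "id" => "user-1", "name" => "" } } }
puts slack_report_payload("user_event", event, results)[:text]
# post_to_slack(ENV.fetch("SLACK_WEBHOOK_URL"), payload)  # hypothetical env var
```

Keeping payload building separate from the HTTP call means the message format can be checked without network access.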
